Demystifying Loss Functions in Image Matte Segmentation

A Comprehensive Guide to Choosing the Right Loss Function for Precise Image Matte Segmentation

Introduction

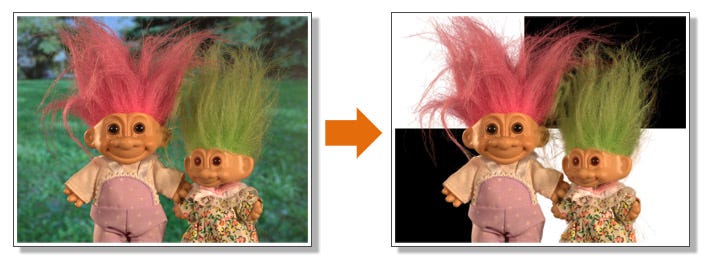

Image matte segmentation is a fundamental task in computer vision, with applications ranging from photo editing to virtual reality. At its core, the goal is to separate an object from its background. The result is often represented as an alpha matte, a per-pixel map of how opaque the foreground is. Achieving this requires training a neural network, and one crucial aspect of training is the choice of loss function.

In this blog post, we'll explore various loss functions commonly used in image matte segmentation. We'll break down their concepts and provide examples to help you understand when and why you might choose one over the others.

1. Dice Loss

Dice Loss is a popular choice for image segmentation tasks, including matte segmentation. It measures the similarity between the predicted and ground truth masks using the Dice coefficient. This coefficient is twice the area of the masks' intersection divided by the sum of their areas. It is closely related to the intersection over union (IoU), but the two are not the same.

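The formula for Dice Loss is as follows:

$$\text{Dice Loss} = 1 - \frac{2\,|P \cap G|}{|P| + |G|}$$

where:

P is the predicted mask.

G is the ground truth mask.

For soft (probabilistic) predictions, |P ∩ G| is commonly computed as the sum of the element-wise products of the two masks. |P| and |G| are computed as the sums of their values.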

Example: Imagine you have a predicted mask and a ground truth mask for a matte segmentation task. Dice Loss quantifies how well they overlap. A Dice Loss of 0 indicates a perfect match, while a loss of 1 means no overlap at all.
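Here is a minimal NumPy sketch of soft Dice Loss that shows the two extreme cases. It assumes predictions are foreground probabilities in [0, 1]. The function name `dice_loss` and the smoothing constant `eps` are illustrative choices, not from any particular library. In training you would write the same arithmetic with your framework's tensor operations so that gradients can flow.

```python
import numpy as np


def dice_loss(pred: np.ndarray, target: np.ndarray, eps: float = 1e-7) -> float:
    """Soft Dice loss between a predicted mask and a ground truth mask.

    pred:   predicted foreground probabilities in [0, 1] (any shape)
    target: ground truth mask with values in [0, 1], same shape as pred
    eps:    small smoothing constant (an assumption) that avoids 0/0
            when both masks are empty
    """
    pred = pred.astype(np.float64).ravel()
    target = target.astype(np.float64).ravel()
    # |P ∩ G| approximated by the sum of element-wise products
    intersection = np.sum(pred * target)
    # |P| + |G| approximated by the sum of all values in both masks
    total = np.sum(pred) + np.sum(target)
    dice = (2.0 * intersection + eps) / (total + eps)
    return float(1.0 - dice)


gt = np.array([[0, 1, 1], [0, 1, 1]])
print(dice_loss(gt, gt))      # perfect match: close to 0
print(dice_loss(1 - gt, gt))  # no overlap: close to 1
```

Because the loss depends only on region-level sums, a handful of foreground pixels weighs as much as the whole background. This is one reason Dice Loss copes reasonably well with small objects.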

2. Focal Loss

Focal Loss is designed to address class imbalance, which is common in segmentation tasks. It assigns higher weights to hard-to-classify pixels, making it particularly useful when dealing with imbalanced foreground and background regions.

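The formula for Focal Loss includes a term that downweights well-classified examples and focuses on the challenging ones:

$$\text{FL}(p_t) = -(1 - p_t)^{\gamma} \log(p_t)$$

where:

p_t is the predicted probability of the true class for a pixel: p if the pixel is foreground, 1 - p if it is background.

γ ≥ 0 is a hyperparameter that controls the degree of focusing. With γ = 0, Focal Loss reduces to standard cross-entropy. Larger values shrink the loss of confidently correct pixels more strongly. Many implementations also multiply by a class-balancing weight α_t.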

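Example: In matte segmentation, background pixels often vastly outnumber foreground pixels, and most of them are easy to classify. Focal Loss keeps those easy pixels from dominating training. The model can then pay more attention to the harder pixels, which typically lie along the intricate details of the object boundary.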
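The sketch below computes the binary Focal Loss in NumPy and compares γ = 0 (plain cross-entropy) with γ = 2. It assumes a binary ground truth mask and foreground probabilities as input. The function name, the optional `alpha` weighting, and the clipping constant `eps` are illustrative assumptions. Treat it as a forward computation only, not a training-ready implementation.

```python
from typing import Optional

import numpy as np


def focal_loss(pred: np.ndarray, target: np.ndarray, gamma: float = 2.0,
               alpha: Optional[float] = None, eps: float = 1e-7) -> float:
    """Mean binary focal loss over all pixels.

    pred:   predicted foreground probabilities in (0, 1)
    target: binary ground truth mask (1 = foreground, 0 = background)
    gamma:  focusing parameter; gamma = 0 gives plain binary cross-entropy
    alpha:  optional foreground weight for class balancing
            (background pixels get 1 - alpha); an assumed convention
    """
    # Clip probabilities to avoid log(0)
    p = np.clip(pred.astype(np.float64), eps, 1.0 - eps)
    t = target.astype(np.float64)
    # p_t: probability the model assigns to each pixel's true class
    p_t = t * p + (1.0 - t) * (1.0 - p)
    # (1 - p_t) ** gamma shrinks the loss of confidently correct pixels
    loss = -((1.0 - p_t) ** gamma) * np.log(p_t)
    if alpha is not None:
        alpha_t = t * alpha + (1.0 - t) * (1.0 - alpha)
        loss = alpha_t * loss
    return float(loss.mean())


gt = np.array([1, 0, 0, 0])
pred = np.array([0.6, 0.1, 0.05, 0.9])  # the last pixel is a confident mistake
print(focal_loss(pred, gt, gamma=0.0))  # gamma = 0: equals binary cross-entropy
print(focal_loss(pred, gt, gamma=2.0))  # smaller: easy pixels now contribute little
```

With γ = 2, the two easy background pixels (0.1 and 0.05) barely contribute. Most of the remaining loss comes from the confidently wrong last pixel.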

3. Lovasz Loss

Lovasz Loss is a convex surrogate for the Jaccard index (IoU). It does not score pixels independently. Instead, it optimizes the overlap metric itself, which makes it valuable when mask quality is judged by IoU.

The key concept behind Lovasz Loss is the Lovász extension of a submodular set function. The Jaccard loss is submodular, and its Lovász extension turns it into a piecewise-linear convex function that can be optimized with gradient descent. In practice, the per-pixel errors are sorted in decreasing order. Each error is then multiplied by the increase in Jaccard loss it contributes at that position in the ordering.

Example: Lovasz Loss is a good fit when your segmentation quality is measured with IoU. It lets you optimize that metric directly rather than a per-pixel proxy.
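The following NumPy sketch implements the sorting-based Lovasz computation for a single foreground class. It assumes a binary (0/1) ground truth mask and foreground probabilities as predictions. The function names `lovasz_grad` and `lovasz_loss` are illustrative, and the code computes the loss value only, without gradients. A useful sanity check is that for a hard 0/1 prediction, the result equals the ordinary Jaccard loss, 1 - IoU.

```python
import numpy as np


def lovasz_grad(gt_sorted: np.ndarray) -> np.ndarray:
    """Increments of the Jaccard loss as pixels are added, in sorted order.

    gt_sorted: binary ground truth, reordered by decreasing prediction error
    """
    gts = gt_sorted.sum()
    # Ground truth pixels not yet covered by the error set
    intersection = gts - np.cumsum(gt_sorted)
    # Ground truth size plus false positives added so far
    union = gts + np.cumsum(1.0 - gt_sorted)
    jaccard = 1.0 - intersection / union
    # Turn cumulative Jaccard losses into per-position increments
    jaccard[1:] = jaccard[1:] - jaccard[:-1]
    return jaccard


def lovasz_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Lovasz extension of the Jaccard loss for one foreground class.

    pred:   predicted foreground probabilities in [0, 1]
    target: binary ground truth mask (0 or 1), same shape as pred
    """
    p = pred.astype(np.float64).ravel()
    t = target.astype(np.float64).ravel()
    # Per-pixel errors, sorted from largest to smallest
    errors = np.abs(t - p)
    order = np.argsort(-errors, kind="stable")
    # Weight each sorted error by its Jaccard-loss increment
    return float(np.dot(errors[order], lovasz_grad(t[order])))


gt = np.array([1, 1, 0, 0])
hard_pred = np.array([1, 0, 1, 0])
print(lovasz_loss(hard_pred, gt))  # equals 1 - IoU = 2/3 for this hard prediction
```

For soft predictions, the same function smoothly interpolates between these hard cases. That interpolation is what makes it usable as a training loss.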

4. Jaccard Loss

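Jaccard Loss, also known as Intersection over Union (IoU) Loss, is one minus the IoU between the predicted and ground truth masks. It is closely related to Dice Loss. For hard masks the two coefficients satisfy Dice = 2·IoU / (1 + IoU), so they rank predictions identically. Their gradients differ, however, which makes Jaccard Loss an alternative way to optimize for mask overlap.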

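The formula for Jaccard Loss is similar to that of Dice Loss:

$$\text{Jaccard Loss} = 1 - \frac{|P \cap G|}{|P \cup G|} = 1 - \frac{|P \cap G|}{|P| + |G| - |P \cap G|}$$

where P is the predicted mask and G is the ground truth mask.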

Example: If you care about optimizing for mask overlap, especially when IoU is your evaluation metric, and prefer a different formulation from Dice Loss, Jaccard Loss is a suitable choice.
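This NumPy sketch computes a soft Jaccard Loss and checks the relationship between IoU and the Dice coefficient. Predictions are assumed to be probabilities in [0, 1]. The name `jaccard_loss` and the smoothing constant `eps` are illustrative assumptions.

```python
import numpy as np


def jaccard_loss(pred: np.ndarray, target: np.ndarray, eps: float = 1e-7) -> float:
    """Soft Jaccard (IoU) loss between a predicted and a ground truth mask."""
    pred = pred.astype(np.float64).ravel()
    target = target.astype(np.float64).ravel()
    # |P ∩ G| approximated by the sum of element-wise products
    intersection = np.sum(pred * target)
    # |P ∪ G| = |P| + |G| - |P ∩ G|
    union = np.sum(pred) + np.sum(target) - intersection
    return float(1.0 - (intersection + eps) / (union + eps))


gt = np.array([1, 1, 0, 0])
pred = np.array([1, 0, 1, 0])
loss = jaccard_loss(pred, gt)
print(loss)                   # about 2/3: IoU is 1/3
iou = 1.0 - loss
print(2 * iou / (1 + iou))    # about 0.5: the Dice coefficient for the same masks
```

For this pair of masks, Dice Loss would be 0.5 while Jaccard Loss is about 0.667. Jaccard penalizes the same imperfect overlap more heavily, although both agree on which of two predictions is better.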

5. Alpha Loss

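Alpha Loss is a loss function designed specifically for alpha matting tasks. The previous losses compare segmentation masks. Alpha Loss instead directly regresses the continuous alpha value of each pixel, its foreground opacity between 0 and 1, which is essential for accurate object extraction.

The exact formula varies between methods. A common form is a smoothed absolute difference between the predicted alpha α_p and the ground truth alpha α_g:

$$\mathcal{L}_\alpha = \sqrt{(\alpha_p - \alpha_g)^2 + \epsilon^2}$$

where ε is a small constant that keeps the loss smooth and differentiable at zero. It is often averaged only over the uncertain (unknown) region of a trimap, where alpha values are hardest to predict.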

Example: When your primary goal is accurate alpha prediction, such as in portrait editing or green screen removal, Alpha Loss is a natural choice.
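Below is a minimal NumPy sketch of the smoothed absolute-difference form of Alpha Loss. The optional `weights` map is a hypothetical stand-in for the unknown region of a trimap. The value ε = 1e-6 is an assumed default, and the function name `alpha_loss` is illustrative. Exact formulations differ between matting methods.

```python
from typing import Optional

import numpy as np


def alpha_loss(pred_alpha: np.ndarray, gt_alpha: np.ndarray,
               weights: Optional[np.ndarray] = None, eps: float = 1e-6) -> float:
    """Smoothed L1 distance between predicted and ground truth alpha values.

    pred_alpha, gt_alpha: alpha mattes with values in [0, 1]
    weights: optional (hypothetical) per-pixel mask, e.g. 1 inside the
             trimap's unknown region and 0 elsewhere
    """
    diff = pred_alpha.astype(np.float64) - gt_alpha.astype(np.float64)
    # sqrt(d^2 + eps^2) behaves like |d| but is differentiable at d = 0
    per_pixel = np.sqrt(diff ** 2 + eps ** 2)
    if weights is None:
        return float(per_pixel.mean())
    w = weights.astype(np.float64)
    # Average only over the pixels selected by the weight map
    return float((per_pixel * w).sum() / max(w.sum(), 1e-12))


gt = np.array([[0.0, 0.5, 1.0]])
pred = np.array([[0.0, 0.3, 1.0]])
unknown = np.array([[0, 1, 0]])
print(alpha_loss(pred, gt))           # about 0.067: error averaged over all pixels
print(alpha_loss(pred, gt, unknown))  # about 0.2: error in the unknown region only
```

Restricting the average to the unknown region stops the many trivially correct pure-foreground and pure-background pixels from diluting the error on semi-transparent pixels such as hair.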

Conclusion

In image matte segmentation, selecting the right loss function is crucial for training a model that meets your specific needs. The choice often depends on your dataset, the desired output quality, and the trade-offs you are willing to make.

Experimentation is key. Try different combinations of these loss functions, adjust their weights, and observe how they impact your model's performance. By understanding the concepts behind each loss function and their practical implications, you'll be better equipped to tackle image matte segmentation tasks effectively.
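Combining loss functions usually means taking a weighted sum of them. The NumPy sketch below pairs Focal Loss (per-pixel, hard-example focused) with Dice Loss (region overlap) and sweeps the Dice weight. The helper names and the weights `w_focal` and `w_dice` are hypothetical placeholders to tune for your own data, not recommended values.

```python
import numpy as np


def dice_loss(pred: np.ndarray, target: np.ndarray, eps: float = 1e-7) -> float:
    """Soft Dice loss (region overlap)."""
    inter = np.sum(pred * target)
    return float(1.0 - (2.0 * inter + eps) / (np.sum(pred) + np.sum(target) + eps))


def focal_loss(pred: np.ndarray, target: np.ndarray, gamma: float = 2.0,
               eps: float = 1e-7) -> float:
    """Mean binary focal loss (per-pixel, focuses on hard pixels)."""
    p = np.clip(pred, eps, 1.0 - eps)
    p_t = target * p + (1.0 - target) * (1.0 - p)
    return float(np.mean(-((1.0 - p_t) ** gamma) * np.log(p_t)))


def combined_loss(pred: np.ndarray, target: np.ndarray,
                  w_focal: float = 1.0, w_dice: float = 1.0) -> float:
    """Weighted sum of focal and Dice losses; the weights are hypothetical."""
    return w_focal * focal_loss(pred, target) + w_dice * dice_loss(pred, target)


gt = np.array([1.0, 1.0, 0.0, 0.0, 0.0, 0.0])
pred = np.array([0.9, 0.4, 0.2, 0.1, 0.05, 0.3])
for w_dice in (0.0, 0.5, 1.0):
    # Larger w_dice shifts the emphasis toward overall mask overlap
    print(w_dice, combined_loss(pred, gt, w_focal=1.0, w_dice=w_dice))
```

The two terms can differ in scale, so a weight that looks balanced on paper may let one term dominate. Logging each term separately during training makes that easier to spot.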

Remember, the "best" loss function varies from one project to another, so keep experimenting and refining your approach to achieve the results you desire.

Happy segmenting!